Support for Drupal 7 is ending on 5 January 2025—it’s time to migrate to Drupal 10! Learn about the many benefits of Drupal 10 and find migration tools in our resource center.

Support for Drupal 7 is ending on 5 January 2025—it’s time to migrate to Drupal 10! Learn about the many benefits of Drupal 10 and find migration tools in our resource center.In #2931611: Honeypot key value entries never expire (effectively), a bug which allowed an infinite number of honeypot_time_restriction entries to be written was fixed. However, for any Drupal 8 sites running Honeypot prior to the version with that fix, there are likely at least hundreds, if not thousands or more rows in the database that are set to never expire.

An update hook should be written which deletes all entries without an expiration set. The update hook could take a long time to run for some sites with a huge number of entries, so it may need to process them using the batch api.

| Comment | File | Size | Author |

|---|---|---|---|

| #57 | 2997609-test-only.patch | 993 bytes | claudiu.cristea |

| #43 | batch-operation.png | 18.21 KB | pfrenssen |

| #43 | confirm-form.png | 36.35 KB | pfrenssen |

| #43 | status-report.png | 25.99 KB | pfrenssen |

| #26 | 2997609-26.patch | 1.94 KB | Manuel Garcia |

| |||

Issue fork honeypot-2997609

Show commands

Show commands

Start within a Git clone of the project using the version control instructions.

Or, if you do not have SSH keys set up on git.drupalcode.org:

Comments

Comment #2

C-LogemannWe have a customer system where we already have more than 6,7 million honeypot entries blowing up the DB with some GB. I will create a direct database command to delete. But I agree that there should be a solution for all the unneeded honeypot data on all systems.

Comment #3

geerlingguy CreditAttribution: geerlingguy at Midwestern Mac, LLC commented@C_Logemann - Thanks for the information! Once you have that DB query, could you share it here in case someone else needs to do the same thing until this update hook is written?

Comment #4

C-Logemann@geerlingguy Yes I already planned to share my SQL-Snippit when it's tested.

What's your Idea for the clean up at the update hook? Maybe also a database call for deleting all honeypot entries with too long time to expiration in key_value_expirable? This is something I can develop on upcoming Drupal Global Contribution Weekend January 25-27, 2019.

Comment #5

C-LogemannAll following SQL-Codes are wrapped in drush 8 commands.

It's good to find out how many table rows you have to decide what to do next. Maybe we can also analyze the data in the update hook and maybe do not delete all at once if the value is to high (see below):

drush sql:query 'SELECT collection, COUNT(*) AS num FROM key_value_expire WHERE collection = "honeypot_time_restriction";'Because all "expire" values are identical in my case I removed an additional condition to filter time based. But if you like to operate more granular you can calculate a unix timestamp and use it in this way:

drush sql:query 'SELECT collection, COUNT(*) AS num FROM key_value_expire WHERE collection = "honeypot_time_restriction" AND expire > "1147483647";'Before you decide which strategy you want to use to delete it's better to test it because it can take a long time to delete and this can maybe affect the normal usage of your system.

In our case the following command was running some minutes. So I decided to not use on the production system but it was helpful to use on the dev system to analyze the data. Because of this performance impact I think we need to warn people if we put this in an update hook. It should only be used with drush without php time limit and decoupled from SSH connection (screen, tmux, byobu ...):

drush sql:query 'DELETE FROM key_value_expire WHERE collection = "honeypot_time_restriction";'After analyzing the data which were not deleted with the command above I decided to truncate the complete table because this was running much faster and was freeing disk space very fast.

(Edit:) Maybe take a look at this Post in "Drupal Answers": https://drupal.stackexchange.com/questions/221985/what-is-the-purpose-of....

But this is nothing for an update hook and should only be used if you can loose other data in the table "honeypot_time_restriction" which is not from the honeypot module:

drush sql:query 'TRUNCATE TABLE key_value_expire;'Comment #6

AnybodyWhao key_value_expire grew up to 2,3 GB with honeypot entries on a customer site... So this is still quite important. ;)

Comment #7

Manuel Garcia CreditAttribution: Manuel Garcia as a volunteer commentedHad a go at this, turns out we cant do it in batches, as far as I know - though let me know if you have ideas:

key_value_expiredoes not have any id-like columnsdeletequeriesSo here is the update hook deleting all entries with an expiry date older than what we set by default. I also removed an unused use statement while I was at it :)

Comment #8

Manuel Garcia CreditAttribution: Manuel Garcia as a volunteer commentedactually, this should be a

<I believeComment #9

geerlingguy CreditAttribution: geerlingguy at Midwestern Mac, LLC commentedIt looks like this adds 3 new coding standards issues... https://www.drupal.org/pift-ci-job/1265461

Comment #10

Manuel Garcia CreditAttribution: Manuel Garcia as a volunteer commentedAddressing #8 & #9

Comment #11

Manuel Garcia CreditAttribution: Manuel Garcia as a volunteer commentedForgot one.

Comment #12

AnybodyWonderful, @Manuel Garcia, RTBC+1 for #11. Works perfectly for me. Thanks!!

Comment #13

C-LogemannThe current patch has no solution to handle situations where we have to many wrong entries in database. There is a big risk that this update hook can fail in such situations especially when triggered by update.php. So I strongly recommend to add a strategy for communication with site admin before starting a big cleanup. Maybe it's better to move the cleanup thing away from update hook to main code with an UI e.g. a warning and a corresponding "cleanup link" on the status page.

Comment #14

SpokjeAdded a patch that uses the Batch API deleting the incorrect/never expiring entries for honeypot.

If there are a _lot_ of those entries, this update will take a _long_ time, but it _shouldn't_ take down the site doing the cleanup.

I think the decision to do this to the UI as suggested in #13 could be better, we (with around 20 D8 sites) managed to get away with the batched

hook_update_Napproach as in the patch, since we didn't manage a D8 site with "mega enormous" amounts of the mentioned entriesComment #15

SpokjeHiding previous patches, since they _will_ take a site down due too DB becoming unresponsive upon running update.

Comment #16

SpokjeNote to self: first make sure you uploaded the correct patch, and then click 'Save'...

Also another extra reason to do this in an UI operation: Since triggering a hook_update (at least through the command line with drush) puts the site automatically in Maintenance Mode, and this can be a _long_ update, the Maintenance Mode "down time" can be more than just annoying.

Comment #17

SpokjeSorry, incorrect

indexline in previous patchComment #18

geerlingguy CreditAttribution: geerlingguy at Midwestern Mac, LLC commentedThe proper way to do this cleanup is via update hook, though—if we don't automate it or leave it to the site owner to do it, in 90%+ of cases it will never get done... I haven't had issues with batch API on a well-tested update hook on many different sites, often operating on hundreds of thousands or millions of database rows.

Maybe I'm missing something major, but what is the main risk with this being in an update hook?

Is that true? I don't believe running updates via

drush upor even via Drupal's update.php ever puts the site into maintenance mode, unless you explicitly do that _before_ the updates...Comment #19

Spokje@geerlingguy

QFT

Same here, I just did an update with the patch in #18 to wipe out around 525.000 rows, took around 10 minutes, site behaved normally during the whole time.

That would be the only caveat I can see.

Also, I never noticed this before, but seeing this hook_update_N took much longer than the "normal" ones, the reason for not noticing could be that "normal" ones are finished before my normal browser-refresh to check on the site is done.

I couldn't remember seeing a CR for this so I did some research:

Turns out when using the Batch API in a

hook_update_Nand using drush, Maintenance Mode is triggered: https://drupal.stackexchange.com/a/270795So basically I would say:

hook_update_Nshould be the way to go, batching seems the only way to keep the database from choking whilst doing the purge, so my patch in #18 seems, at the very least IMHO, the correct way of tackling the purge.Comment #20

Manuel Garcia CreditAttribution: Manuel Garcia as a volunteer commentedGlad to see there's a way about batching this, nice work figuring that out.

The proposed batch update is going to be very slow since it does a delete query per row. This would be solved by using LIMIT on the delete query per batch, but unfortunately since Postgres does not support his feature, neither does Drupal.

Comment #21

Spokje@Manual Garcia

I agree on the slowness, but I, as you did, found out there's no LIMIT available on a DELETE query in Drupal.

I sadly think, this is the fastest way that is possible with the tools available, but would love to be proven wrong.

Comment #22

gambryI'm not sure - but I presume - using

DELETE WHERE name IN ([..])query is faster than looping and querying 500 rows per batch?i.e.

It runs in ms on mine, so probably the limit can be bumped up to 1k. And I presume even higher.

UPDATE: to optimise and speed up the delete query we can at this point also remove the

->condition('expire', 2147483647)bit, as the condition already ran above and the primary key for the table is collection + name.Comment #23

jwilson3These unrelated lines should be removed from this patch.

See https://www.drupal.org/node/2774931

Comment #24

jwilson3Comment #25

marthinal CreditAttribution: marthinal at Bluespark commentedRerolled

Comment #26

Manuel Garcia CreditAttribution: Manuel Garcia as a volunteer commentedThanks for the rerroll @marthinal

I had a thought about what @gambry said on #22 and to me it makes a lot of sense, so I went ahead and updated the patch to use that approach. I've not actually tested this so please kindly do so on your environments and report back :)

Comment #27

C-LogemannTriggering the maintenance mode is not a caveat for me because it's recommended to use maintenance mode when running updates (e.g. mentioned @ /core/UPDATE.txt). Especially site where user data are collected a backup (after activating maintenance mode) is highly recommended. So admins can switch back when an update fails. I just had this situation some days ago.

Comment #28

geerlingguy CreditAttribution: geerlingguy at Midwestern Mac, LLC commentedI'm willing to merge this into the next release if I can get someone to review it with a live site's backup and make sure it doesn't cause any weird problems on running it.

Comment #29

aleksipI tested the latest patch (#26) on a real production site (Drupal 8.8.1, Honeypot 1.30). The size of the

key_value_expiretable was 1,101 MB, and it contained 4,059,873honeypot_time_restrictionentries that needed to be deleted.The update did put the site in maintenance mode. It took 3 hours 46 minutes on a relatively modest DigitalOcean Droplet setup and finished successfully. The size of

key_value_expirewent down to 5.55 MB.The patch looks fine to me and seems to work as intended so marking as RTBC.

Comment #30

Manuel Garcia CreditAttribution: Manuel Garcia as a volunteer commentedThanks @aleksip for reporting on that, great to hear it worked fine for you.

(re #29) I think 3h and 46min is a very long time to execute an update hook. I'm guessing we could do much better here as @gambry mentioned in #22. We may want to bump this to 1000 or even higher.

Any chance you still have that DB before running the upgrade @aleksip? - would be great to test if it runs fine with 1000 or 2000 etc.

Comment #31

geerlingguy CreditAttribution: geerlingguy at Midwestern Mac, LLC commentedYikes; deleting 1 GB of db records shouldn't take 3 hours—I agree a larger batch size may be appropriate, at least 1000, maybe even like 5000? It's a matter of playing around with RAM usage vs time per batch.

Comment #32

gambryIf it tooks 226 min with 500 deletions per batch, increasing this to 5000 (x10) should reduce that time to about 22min, which is much shorter, but still an eternity for some websites to stay in maintenance mode.... have we investigated the possibility to do this cleanup in a cronjob?

Another option could be to make

$deletable_honeypot_time_restriction_entries_per_batchconfigurable, i.e. using Settings or State API. We can set a default of 1000 (about 2hours) or 5000 (about 22min) and site managers can test the batch on stage websites and decide an alternative better value. I presume websites not accepting 22min as downtime have servers which should easily support 7.5k - 10k$deletable_honeypot_time_restriction_entries_per_batch.Comment #33

geerlingguy CreditAttribution: geerlingguy at Midwestern Mac, LLC commented@gambry - We could also, in the release notes for the next version of the module that has this fix, add a warning like "if you would like to avoid having your site in maintenance mode for a while, you can manually remove all the entries using this process: (link here)." That might sidestep the issue entirely.

Comment #34

gambry@geerlingguy that is a nice option we could do anyway. But I'm wondering how many site-managers follow the best practices of reading Release Notes before upgrading a module? 🤔

Also an additional option - which could sidestep the issue entirely AND more likely to be viewed by site-manager - is to add a

hook_requirements()which querieskey_value_expiretable and if any "bad" record is found it raises a warning or error and links to a documentation page, or shows directly the query to clean up the table.Then the work done in here can still be added:

a. as a API/helper for anyone to extend and use it

b. as a custom batch form, for not-technical site-managers

c. as a cron job task, site-managers can opt-out/opt-in from.

Thoughts?

Comment #35

Manuel Garcia CreditAttribution: Manuel Garcia as a volunteer commentedI think we should go MVP on this and write first the update hook to clean up people's sites before they run into (more) trouble.

We can always do follow ups if people are interested in the ideas on #34.

Comment #36

jonathanshawThe point of #34 is to not use an update hook at all, but to flag the problem and make the solution opt-in. It seems like a good direction to me. People won't read the release notes, they will see their site is offline for some time, the will try to restart their server part way through the update, the issue queues here will get flooded with various kinds of weirdness...

Hook_requirements and a custom batch form might be safer.

Comment #37

geerlingguy CreditAttribution: geerlingguy at Midwestern Mac, LLC commented@gambry - I think I like your suggestion, specifically:

We could just put the functionality into a helper function and say:

(Not that exact command/name, but basically if someone can just run one drush command (or one database query to run), it would be sufficient.

Comment #38

PapaGrandeI agree with this approach and pulling the code out of an update hook.

As a benchmark, I changed

$deletable_honeypot_time_restriction_entries_per_batchto 10000, and randrush updatedbon a Pantheon Multidev server. 7584423 rows were removed in 6 minutes 53 seconds.If I had deployed the update unawares and a production site was in maintenance mode for almost 7 minutes, I'd have probably had an aneurysm.

Comment #39

pfrenssenI'll do an iteration on this. I'll start by implementing the hook_requirements() entry.

Comment #40

pfrenssenSwitching to the latest version so I can make an issue fork.

Comment #42

pfrenssenCreated a batch process that can be run in the UI through a link in a

hook_requirements()warning in the status report. I did several tests and indeed the higher the number of items deleted in a single batch the faster the process goes. I settled on 50000 records per batch which matched roughly to the refresh time of the batch UI on my machine. If I put it any lower it processes multiple batches per page refresh. RAM usage seemed OK, we are retrieving 50000 integer values from the database after all, these don't take so much space.Next step is to create a drush command to trigger the batch process.

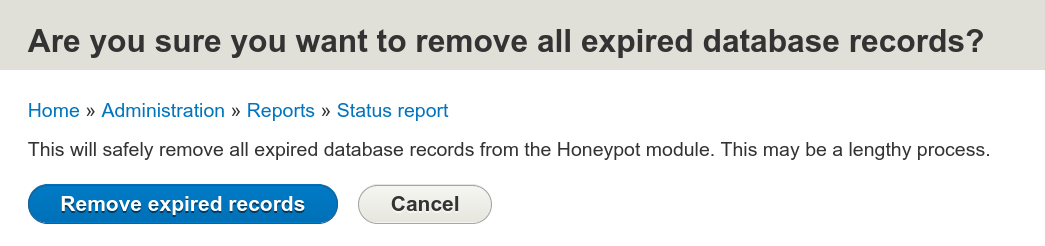

Comment #43

pfrenssenHere's how it looks in the UI:

Status report:

Confirmation form:

Batch operation:

Comment #44

pfrenssenAdded a drush command that triggers the batch cleanup, it goes something like this:

Comment #45

pfrenssenComment #46

idimopoulos CreditAttribution: idimopoulos for European Commission and European Union Institutions, Agencies and Bodies commentedTo get the easy part out of the way, code looks fine. Tested the UI part and works fine. Status report reports fine.

I must say I don't really enjoy this approach. We are having some stale data and we are creating a form to delete them. This form is a one time form which we will have to ditch later as it only works on records with expiration time set to 2147483647. But then again, we don't know when to remove it because we cannot know when everyone is going to remove the stale data.

However, given

A) the comments starting from #34

B) I tried to manually expire the records on our side. It seems that the system_cron is the one taking care of the expired records but not in batches. system_cron will attempt to delete them all at once. Since key_value_expire is a standalone table (not a fieldable entity or anything complex), it is quite fast, but not fast enough nonetheless. It took me 331 seconds for < 3m records to be deleted (I also had XDEBUG enabled).

C) Creating a different cron run would more or less do the same thing - would work on stale data until no more stale data exist and then would be called forever without purpose.

I am going to give the approach a green.

Comment #47

joseph.olstadwe have 225 megabytes in this table .... just noticed!

***EDIT*** our entries were actually from access_unpublished

Comment #48

joseph.olstadPatch 26 ok, but latest merge request doesn't provide a drush command for drush 10.x

does not work with drush 10.x

so I'm going with patch 26 instead as a temporary workaround.

Comment #49

joseph.olstadOOPS,

honeypot was not enabled, that's probably why I couldn't find the drush command

need to review

Comment #50

gambryI think this was RTBC before your comment @joseph.olstad?

Move it back if I'm missing something.

Comment #51

joseph.olstadyes the drush command seems to work fine, thanks, my problem was actually access_unpublished expired keys, not honeypot.

Comment #52

loopy1492 CreditAttribution: loopy1492 commented@joseph.olstad I think I'm having the same isssue. Did you just use the same command except use 'access_unpublished' instead of 'honeypot'?

Comment #53

AnybodyAny active maintainer? Would anyone like to contact the maintainer and tell him about this issue or offer Co-Maintainership?

Comment #54

TR CreditAttribution: TR commented@Anybody: WTF is that supposed to mean?

This may be RTBC, but it's a terrible solution, which is acknowledged by the person who RTBC'd it above.

It's great to have a patch here for those who need to use it for a one-time cleanup, but IMO it's not something that should be added to Honeypot permanently.

Comment #55

Anybody@TR: No reason for strong language. After reading my comment again, I totally understand that it was too short, and should point out why I thought it would make sense to have a maintainer look at this as it's RTBC. But still doesn't justify your unfriendly reply.

I read the comments, had a look at the status and had the impression it would be useful to have a maintainer have a look at this. And it seemed to me, that there was no maintainer activity in this issue since a longer period of time.

So let's set this back to NW, as it should be, as of your unfriendly comment. Anyway, thanks for your reply... but please let's stay friendly.

To be honest, it was just meant to ask for help with Maintainership, but as I see now, you propably read it as criticism.

Comment #56

claudiu.cristeaIf I'm running

drush core:requirementsimmediately after a clean site install, I'm getting:The

key_value_expiretable is created on demand. This explains the failure.Comment #57

claudiu.cristeaTrying again the "test only" as a patch.

Comment #58

TR CreditAttribution: TR commented