Support for Drupal 7 is ending on 5 January 2025—it’s time to migrate to Drupal 10! Learn about the many benefits of Drupal 10 and find migration tools in our resource center.

Support for Drupal 7 is ending on 5 January 2025—it’s time to migrate to Drupal 10! Learn about the many benefits of Drupal 10 and find migration tools in our resource center.| Comment | File | Size | Author |

|---|---|---|---|

| #23 | remaining_items.patch | 1.23 KB | slasher13 |

| #16 | simple_sitemap_queue_api_cron.patch | 13.02 KB | angela_G |

| |||

Comments

Comment #2

oxrc CreditAttribution: oxrc as a volunteer and at Adyax commentedHey there,

I'll be working on a Queue API for cron integration, hope to have it ready in a couple of days. Putting this on myself until then. I'll be working atop of 2.10 code version, because that's what we use in our project.

NOTE: It'll be my first delve into Queue API, so I'll be happy for any comments after the patch is ready.

Regards, Radu.

Comment #3

gbyte CreditAttribution: gbyte as a volunteer and commentedThanks for stepping in. It would be great however if you could work on top of dev; the codebase is cleaner, it is very stable and this would spare loads of effort duplication. I will be releasing v11 in the next few days anyway, so you guys can switch to v11. ;)

Comment #4

gbyte CreditAttribution: gbyte as a volunteer and commentedI've been thinking, definitely go with the dev version.

I think the simplest approach to not rebuild the whole logic would be probably to use the state API and expand the plugin functionality.

For example let the generator plugin that is currently generating links discover when batch_process_limit is reached (in setProgressInfo()?) and then provide its identification (plugin ID) and some kind of information about the current link being processed (in case of entity plugin, it would probably be the entity type and entity ID, in case of a custom link it would just be the numeric key) to the Drupal State API. One way of doing that would be for getBatchIterationElements() to set

$this->context['sandbox']['progress']and$this->context['sandbox']['current_id']from the state API before doing anything else.Then when a new generation is started, the state API has to be checked first to see if the generation process was stopped during last generation and the new generation process has to somehow continue from the last link using the state APIs info about the processing plugin and last link processed. If that's the case, generation has to be fast-forwarded to the correct generator and then to the correct link ("data_set").

These are just initial thoughts, maybe they will be helpful. I haven't investigated using queue API at all yet, as I have never used it. Maybe there is a better way?

Comment #5

oxrc CreditAttribution: oxrc as a volunteer and at Adyax commentedHey,

I'll be using dev then. I've been looking into this issue for some weeks now (first on the 2.1 version of simple_sitemap, because we had it erroneously connected, now with 2.10), so for the time being I see no better approach to make this work with cron.

Thanks for the tips in regards to the progress and how to solve its failures. I hope to have a first verision of the patch running by the end of today/tomorrow.

Comment #6

oxrc CreditAttribution: oxrc as a volunteer and at Adyax commentedA small update - I'm still working on this, switched to latest stable version (working atop of it).

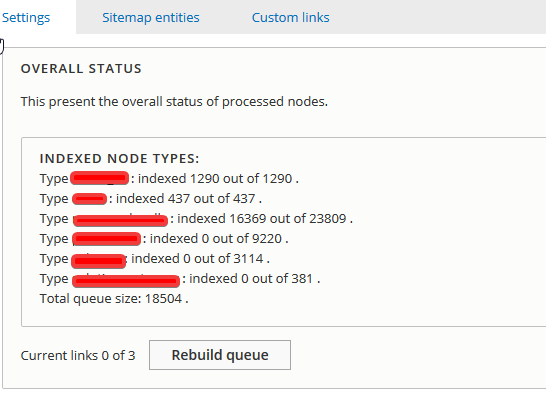

Wanted to share some changes to the overview form that I had to do in order to visualize what's going on.

So next step - getting the plugins to play nice with the Queue API, then I'll be cleaning up the code because it's a mess as it is now.

I don't know if you'll be able to utilize all of the work I'm doing for our client right now, the thing is we'll be implementing some stuff that might go into another issue for simple_sitemap module. Will keep you posted.

Comment #7

gbyte CreditAttribution: gbyte as a volunteer and commentedThis looks like a good initial effort. I am looking forward to a patch!

FYI The plugin implementation has changed slightly in dev to what was published in 2.11, as I implemented the possibility to override the entity generator plugin for certain entity types. We need it to alter menu_link_content entity generation (see #2865973: links.menu definitions are not added to the sitemap).

I will look closely into any changes you implement; if possible please create feature requests along with patches in separate issues, this way we will all profit from the work you are doing for your client.

Comment #8

michaelfavia CreditAttribution: michaelfavia commentedoxrc: able to share that patch? we need to implement this ourselves for a large site and it would be nice to have a strong starting point or to get it merged upstream. Thanks!

Comment #9

gbyte CreditAttribution: gbyte as a volunteer and at gbyte commentedComment #10

gbyte CreditAttribution: gbyte as a volunteer and at gbyte commented@oxrc Any code you could publish we could finish up? Thanks mate.

Comment #11

angela_G CreditAttribution: angela_G at EPAM Systems, Wolters Kluwer commentedWe also faced timeout errors during sitemap generation via Cron on a large site.

If there is some work done already or any plan how to develop the changes, I am also ready to work on this issue.

Comment #12

gcbMarking this major, as it causes pretty significant usability problems on any site with a significant amount of content.

Comment #13

gbyte CreditAttribution: gbyte as a volunteer and at gbyte commentedIt's a pity @oxrc was not able to share his work so far.

I am posting just to tell you guys that as of today I have begun working on this issue. It is a major redesign of the module's inner workings, so please be patient.

Comment #14

wellsThanks for your efforts, @gbyte.co. I have also being quietly watching this issue would be happy to collaborate if you get to a point of breaking things out in to smaller tasks.

Comment #15

othermachines CreditAttribution: othermachines commentedThanks from me, as well. Happy to help test when we get to that point.

Comment #16

angela_G CreditAttribution: angela_G at EPAM Systems, Wolters Kluwer commentedHere is a patch that uses Queue API to generate the sitemap via cron.

It is a quick fix without redesigning the whole module.

It can be used while other more general approach is ready and (maybe) can be a starting point for the general approach.

Comment #18

gbyte CreditAttribution: gbyte as a volunteer and at gbyte commented@everyone I spent the last few days implementing this. Both manual (batch) generation as well as backend/cron (queue) generation use the same queue source now, so one can continue generating any time without having to start over. Now sitemap variants get published only after they have been completely generated. This way the old sitemap is reachable during the generation period of the new one.

Worked for me with 200 000 entities with

Maximum links in a sitemapset to 2000 andSitemap generation max durationset to 10 seconds. Please test the newest 3.x and comment (don't forget to run update.php).I will gladly accept patches that improve performance of this code. It still takes a while to generate hundreds of thousands of entities. Also tests need to be adjusted, I would really appreciate if you could fix tests. :) 3.1 will be a great release.

@angela_G, thanks, but the 'general approach' was already being worked on when your patch arrived, so couldn't use it.

Comment #20

wellsThanks again, @gbyte.co for getting this started. I have tested a bit with an instance with about 16k entities and the default settings and cron is no longer running out of memory: yay!

I do still see memory errors if the

Sitemap generation max durationsetting is too high (I ran out of 512MB allowed memory after processing about 10k entities with a 10 minute limit). For my situation at least, it seems that five minutes is still a safe bet. Overall it takes about 15 minutes worth of work to process 16k entities (on a slow dev machine). What kind of performance did you have with your 200k entities test?Comment #21

wellsI have the 3.x-dev branch running on a production instance now. The site cron runs via system cron every hour and I have the following configuration:

Regenerate the sitemaps during cron runs= TRUE.Sitemap generation interval= Once an hour.Maximum links in a sitemap= 2000.Sitemap generation max duration= 300.The use case here is a news website that adds about five or six articles a day and the ideal would be to regenerate the sitemap once a day to reflect those new articles. There are currently 15,851 entities being built in the sitemap. What is happening right now with these settings is that cron is taking roughly three runs (one hour apart) to finish the queue and always kicking the rebuild process off again on the next run.

If I change the

Sitemap generation intervalto Once a day, the same would be true but it would take three days for each queue to complete. But my expectation here would be more along the lines of -- the queue should only be started once a day (or whatever theSitemap generation intervalis) and should always be processed if it is incomplete. Currently, an in-process queue will only continue to execute at theSitemap generation interval.Does this make sense? I'm happy to submit a patch for it if so.

Comment #22

gbyte CreditAttribution: gbyte as a volunteer and at gbyte commented@wells This is indeed making a lot of sense; it's just I just rewrote this module and apparently did not think of everything. I would like to implement a method that will check if generation is in progress and use it in several places. It will check

QueueWorker::getRemainingElementCountand also thesimple_sitemap.queue_stashed_resultsstate. I think I would prefer me to take care of this.If you would like to help out, I would love to see the tests fixed for 3.x. These are important, otherwise we risk breaking everything on every commit. ;) #3000655: Fix tests for 3.x

Comment #23

slasher13With this patch:

Sitemap generation will finish in (number of cron runs) * (Sitemap generation max duration) and must be shorter than Sitemap generation interval.

Please try:

Comment #24

gbyte CreditAttribution: gbyte as a volunteer and at gbyte commented@slasher13

As I mentioned in the comment above yours, I intended to implement it. Fixed with #3000718: Respect cron_generate_interval only after full generation.

Comment #26

Manuel Garcia CreditAttribution: Manuel Garcia as a volunteer and at Appnovation for Pfizer, Inc. commentedI may be wrong, but it seems like this issue missed an upgrade path from

batch_process_limitsetting togenerate_duration, and now builds throw this error because of outdated configuration:Comment #27

gbyte CreditAttribution: gbyte as a volunteer and at gbyte commentedThere is no upgrade path as the value gets created automatically if it is not set. There must be something wrong with your installation, as the schema error you are getting should not be thrown due to batch_process_limit not being used anywhere.

Please create new issues for these kinds of problems (preferably as support request), otherwise everyone gets notified.

Comment #28

Manuel Garcia CreditAttribution: Manuel Garcia as a volunteer and at Appnovation for Pfizer, Inc. commentedNew value is fine, but since the old config wasn't removed, it's still in the exported config file, thus the error in the build. At least I think that's what happened.

In any case, it's not the end of the world since we can just remove it from the file. I just thought I'd mention it in case someone else ran into it.

Comment #29

gbyte CreditAttribution: gbyte as a volunteer and at gbyte commented@Manuel Garcia

You were right, the issue was fixed here: #3063870: Schema error after updating 2.x to 3.x.

Comment #30

Manuel Garcia CreditAttribution: Manuel Garcia as a volunteer and at Appnovation for Pfizer, Inc. commentedThanks @gbyte.co for the update, and great to see it fixed so quickly! :D